Ghost in the Mirror

So in an earlier episode I talked a bit about my project for hosting a project/resume site for my stuff. Here I'll go into a bit more detail.

My objectives were to have a quick, CDN-capable, responsive site that I could just pop my thoughts onto and have them served up easily, in a modern, good-looking style, with minimal upkeep once it was running. Kind of like a diary, where I can sip my coffee and throw stuff down whenever I get some free time to document thoughts, projects, ideas, opinions on pineapple on pizza, etc.

"Which way did he go George?"

There are tons of options available for that, so to narrow it down further: I like sticking to open-source projects for the street cred. I also like self-hosting because it lets me dig into the guts of the system if need be, and learn more about the hows and the whys.

After perusing various options (and there are a LOT), including my old go-to WordPress, I came across Ghost CMS, which is pretty lightweight (i.e., fast), responsive, supports headless mode (might come in handy?), and has Markdown editing support (I started using Markdown a lot with Obsidian; more on this in a later post).

It also has a working front end that doesn't require me to build one out in JS, which is a bit more work. I'm admittedly weak in JS, though I am learning as I go. It's got tons of other features, but these are the critical ones for me.

Ghost is also something I can host locally and then push to my iceyandcloudy domain, since the site can be flattened into static files that I can host on S3. This lets me get more familiar with AWS's offerings (which is another one of my broad objectives).

Hosting the static output on AWS removes most of the issues with self-hosting and provides a reasonably priced CDN for fast delivery.

Ok let's do this!

So interestingly enough, my Portainer server had a ready-to-use Docker image template for getting a Ghost CMS instance up and running with a click.

I was able to jump on the local instance and get crackin' on the design preferences, familiarize myself with its interface, and actually have a working site in less than 10 minutes.

Now that's what I'm looking for!
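For anyone without Portainer, a roughly equivalent local instance can be sketched with the official `ghost` image from Docker Hub. This is a sketch under assumptions, not the template's actual settings: the container name, volume name and port are hypothetical, and `NODE_ENV=development` is chosen because, as I understand the image docs, it lets Ghost use a bundled SQLite database instead of requiring MySQL. Setting `DRY_RUN=1` just prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: start a local Ghost container, roughly like a one-click template.
# Assumptions (not from the post): container/volume names, the host port,
# and NODE_ENV=development so the image falls back to SQLite.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

start_ghost() {
  local name="${1:-ghost-local}"   # hypothetical container name
  local port="${2:-2368}"          # host port; Ghost listens on 2368 inside
  run docker run -d \
    --name "$name" \
    -e NODE_ENV=development \
    -e url="http://localhost:${port}" \
    -p "${port}:2368" \
    -v ghost-content:/var/lib/ghost/content \
    ghost
}
```

After `start_ghost`, the admin setup should be at `http://localhost:2368/ghost`. The named volume keeps posts and images around if the container is recreated.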

Ok, next to-do! Let's get this puppy flattened out and hosted on S3!

How do dat?

Uhh, let's see here. I can't host a JS server in S3, and I can't pull data live from the local site without running another server, but I don't want to run an EC2 or ECS instance and all of that infrastructure.

So how do I make a working mirror of my local site and replicate it on S3? It needs to be repeatable and scriptable so it can run either on a cron timer (pulling updates daily and pushing them up to S3) or on a trigger after every post. So, something CLI-based. Ok!

For now, let's do cron, since I know how to get that up and running quickly. Ok! Back to flattening. Uhh. Google-fu to the rescue!

There are a few options here: wget, httrack, Ghost Static Site Generator, Next.js + Ghost, Hugo + Ghost, Sunny D, the purple stuff. Let's try the Sunny D! I mean, let's try GSSG, as it is geared towards exactly what I want to do!

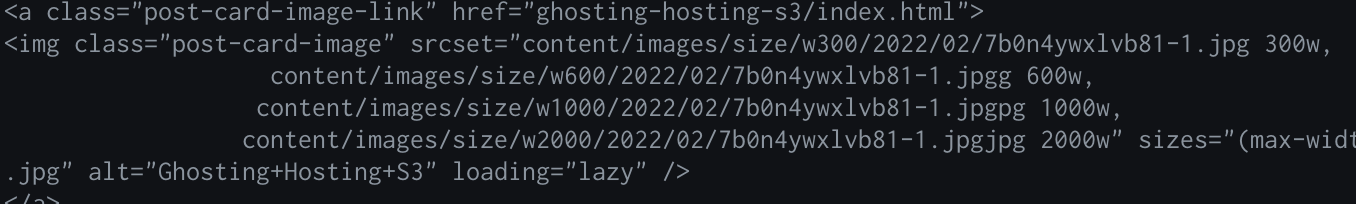

So after pulling from the Fried Chicken repo and following the directions, I was not able to get the damn thing to run correctly: not on my main dev server, in a Node Docker container, or on my MacBook. It would only run with default params and wouldn't accept my flags and options, spitting out either 404 errors or partially cloned sites. Looking at the source files, it appears to just use wget as the mirroring tool, run the result through some filters to get everything lined up, then write it out locally for viewing.

Ok, next option! Ghost + a general site mirroring app!

Let's start with wget and see how it compares, since I use wget a lot for grabbing stuff off the net.

```shell
wget -E -r -k -p https://mysite
```
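Spelled out with wget's long option names, that command is easier to read later. A minimal sketch: the URL is a placeholder, and `serve_dir` is a hypothetical helper wrapping the same `python3 -m http.server` check. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# The same wget mirror with long options; the URL is a placeholder.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

mirror_with_wget() {
  local url="$1"
  # --adjust-extension (-E): add .html (or .css) suffixes to pages saved without one
  # --recursive        (-r): follow links (default depth is 5)
  # --convert-links    (-k): rewrite links to point at the local copies
  # --page-requisites  (-p): also fetch the images, CSS, etc. a page needs
  run wget --adjust-extension --recursive --convert-links --page-requisites "$url"
}

# Serve a directory at http://localhost:PORT for a quick look.
serve_dir() {
  local dir="$1" port="${2:-8000}"
  run python3 -m http.server "$port" --directory "$dir"
}
```

wget normally saves the mirror into a folder named after the host, so a quick check would be along the lines of `mirror_with_wget https://mysite && serve_dir mysite`.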

Runs well! Let's see how it looks served locally with `python3 -m http.server`.

Let's dig a bit and see what's going on here.

For some reason wget turns these jpg filenames into mutants. Horrible scourges of nature, never to be shown to the unwary public.

Ok, next option.

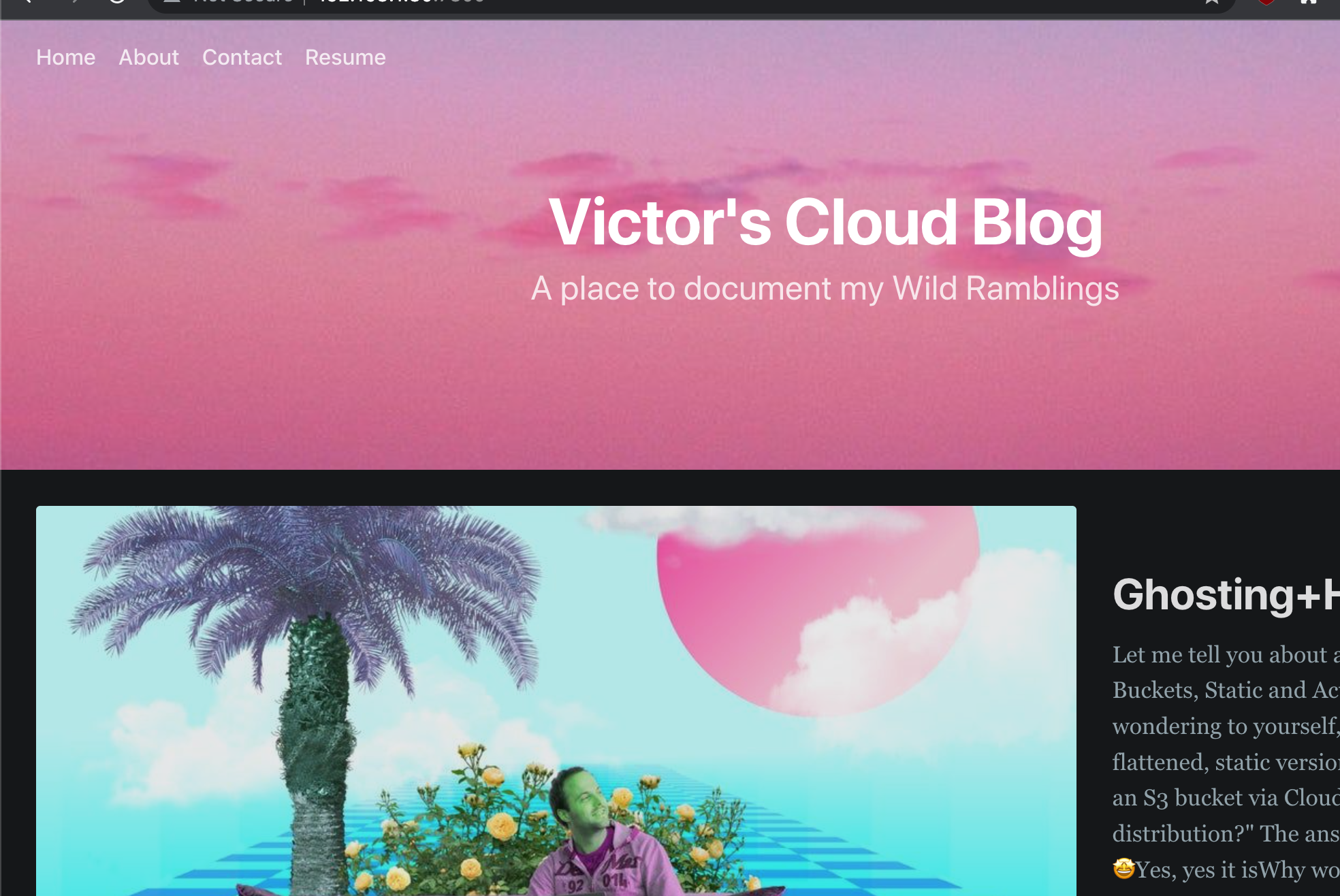

HTTrack, though old, still seems capable of mirroring sites effectively and can run from the CLI. It's also available from the distro package repos, so installing it is as easy as running `apt install httrack`. After a quick read I was able to get a mirrored version of the site available for browsing with `python3 -m http.server`. Neat!

```shell
httrack -Q -q https://mysite
```
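Here is the same HTTrack call with an explicit output folder and the flags annotated, plus a preview helper. A sketch only: the `./mirror` path and the helper names are hypothetical, and I'm assuming HTTrack puts the mirrored pages in a subfolder named after the host (which is what a later `cd mysite` relies on).

```shell
#!/usr/bin/env bash
# HTTrack mirror with an explicit output path; URL and paths are placeholders.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

mirror_with_httrack() {
  local url="$1"
  local out_dir="${2:-./mirror}"   # hypothetical output directory
  # -O: where HTTrack writes the project (cache plus the mirrored host folder)
  # -q: quiet mode, no interactive questions
  # -Q: quiet mode, no log files
  run httrack "$url" -O "$out_dir" -q -Q
}

# Preview the mirrored host folder at http://localhost:PORT.
serve_mirror() {
  local dir="$1" port="${2:-8000}"
  run python3 -m http.server "$port" --directory "$dir"
}
```

Usage would be something like `mirror_with_httrack https://mysite ./mirror && serve_mirror ./mirror/mysite`.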

So the next steps are to stand up an S3 static site, set up permissions and IAM, upload the files, put CloudFront in front of it, connect Route 53 and add a cert, then expose that endpoint. Silky smooth!
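For the S3 part alone, one common way to set up a public static website bucket with the AWS CLI looks roughly like this. A sketch under assumptions: the bucket name and region are placeholders, it uses the S3 website endpoint with a public-read policy (not the only option when CloudFront sits in front), and CloudFront, Route 53 and the certificate are not covered.

```shell
#!/usr/bin/env bash
# Sketch of the S3 static-website setup only; names are placeholders.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

# Public-read bucket policy so the website endpoint can serve objects.
bucket_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

setup_site_bucket() {
  local bucket="$1" region="$2"
  # Create the bucket.
  run aws s3 mb "s3://${bucket}" --region "$region" || return 1
  # Allow a public bucket policy while still blocking public ACLs.
  run aws s3api put-public-access-block --bucket "$bucket" \
    --public-access-block-configuration \
    "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=false,RestrictPublicBuckets=false" || return 1
  # Turn on static website hosting with index.html as the index document.
  run aws s3 website "s3://${bucket}/" --index-document index.html || return 1
  # Attach the public-read policy.
  run aws s3api put-bucket-policy --bucket "$bucket" --policy "$(bucket_policy "$bucket")"
}
```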

Ok, now let's set up a pipeline to mirror and publish page updates, so eventually it can check daily (or, even better, trigger on post updates).

For this part I am using GitHub Actions, as I already have my GH account connected to my dev laptop + VS Code. I'm lazy, ok? Is that what you want to hear? Oh, also I know it's very functional, with lots of extensible support, including AWS actions that can sync changes from my GH Actions environment to my buckets, complete with credentials stored as secrets in env vars.

Clean, real clean, like my pants is.
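The secrets those AWS actions read are an IAM user's access keys, and that user only needs enough access to sync the bucket and invalidate the cache. A minimal sketch of a tightly scoped inline policy, assuming a dedicated IAM user; the user name, policy name, account ID and distribution ID are all hypothetical. The resulting keys would then go into the repo secrets the workflow reads (`AWS_ACCESS_KEY_ID_DEV` and `AWS_SECRET_ACCESS_KEY_DEV`).

```shell
#!/usr/bin/env bash
# Sketch: least-privilege inline policy for a CI deploy user.
# All names and IDs passed in are placeholders.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

deploy_policy() {
  local bucket="$1" account_id="$2" distribution_id="$3"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ListBucketForSync",
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Sid": "ReadWriteDeleteObjects",
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::${bucket}/*"
    },
    {
      "Sid": "InvalidateCache",
      "Effect": "Allow",
      "Action": "cloudfront:CreateInvalidation",
      "Resource": "arn:aws:cloudfront::${account_id}:distribution/${distribution_id}"
    }
  ]
}
EOF
}

# Attach the policy inline to an existing IAM user (hypothetical policy name).
attach_deploy_policy() {
  local user="$1" bucket="$2" account_id="$3" distribution_id="$4"
  run aws iam put-user-policy --user-name "$user" \
    --policy-name ghost-mirror-deploy \
    --policy-document "$(deploy_policy "$bucket" "$account_id" "$distribution_id")"
}
```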

So next up, I built a dev S3 bucket and a GHA pipeline that works as follows:

Trigger: on push to the `dev` branch

- Run httrack on GH Actions to mirror the Ghost site from my local server
- Sync the mirrored files from the GH Actions runner to the dev S3 bucket
- Run a CloudFront invalidation so the cache serves a fresh version of the changes
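The three steps above can also be sketched as a plain shell function, which is handy for running them outside GitHub Actions (say, from cron). A sketch, not the pipeline itself: every argument is a placeholder, and `host_dir` assumes HTTrack writes the pages into a folder named after the host, as the workflow's `cd mysite` step suggests.

```shell
#!/usr/bin/env bash
# Mirror -> sync -> invalidate, as one function. All arguments are placeholders.

# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi; }

publish_site() {
  local site_url="$1" host_dir="$2" bucket="$3" distribution_id="$4"
  local work_dir="${5:-./mirror}"   # hypothetical scratch directory

  # 1. Mirror the Ghost site (same quiet flags as the workflow).
  run httrack "$site_url" -O "$work_dir" -q -Q || return 1

  # 2. Sync the host folder to the bucket, deleting objects no longer present locally.
  run aws s3 sync "${work_dir}/${host_dir}" "s3://${bucket}" --delete || return 1

  # 3. Invalidate every path so CloudFront fetches the fresh copy.
  run aws cloudfront create-invalidation \
    --distribution-id "$distribution_id" --paths "/*"
}
```

For example: `publish_site https://mysite mysite my-bucket my-distro-id`. Each step only runs if the previous one succeeded.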

From there I can connect to my dev bucket and check everything out.

```yaml
name: Upload to Dev S3 Website Bucket and Invalidate CF Cache

on:
  push:
    branches:
      - dev

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Install httrack
        # Refresh the package index first so the install doesn't fail on stale lists
        run: |
          sudo apt-get update
          sudo apt-get install -y httrack

      - name: Checkout
        uses: actions/checkout@<release-tag>  # pin to a published release tag

      - name: Mirror ghost site
        # The "cd mysite" step below relies on httrack creating a folder named after the host
        run: httrack -Q -q https://mysite

      - name: Mirror complete
        run: echo "Mirrored and ready to sync to dev"

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@<release-tag>  # pin to a published release tag
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID_DEV }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY_DEV }}
          aws-region: us-west-1

      - name: Deploy static site to S3 bucket
        # --delete removes objects from the bucket that no longer exist in the mirror
        run: |
          cd mysite
          aws s3 sync . s3://my-bucket --delete

      - name: Invalidate dev CF Distro
        run: aws cloudfront create-invalidation --distribution-id me-distro-id --paths "/*"
```

Everything's working like it's supposed to, so I copy the changes into a main.yml workflow, adjust it to point at the main site's AWS resources (bucket, distribution, and so on), then merge into the main branch and let the actions run, publishing everything to my main site (you are here).

And voilà! A statically generated version of a Ghost CMS site hosted on S3, with CloudFront CDN speed and reliability! That's mad fresh.

Next steps: add a cron timer to check for updates daily, or figure out how to run a trigger if I'm feeling extra motivated!
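For the cron half, a minimal sketch assuming the mirror-and-publish steps live in a script on the local server. The script path, log path and 06:00 schedule are hypothetical. The helper appends a crontab line only if it isn't already there, so re-running setup doesn't schedule the job twice.

```shell
#!/usr/bin/env bash
# Read a crontab on stdin, print it back with LINE appended if missing.

add_cron_line() {
  local line="$1"
  local current
  current="$(cat)"
  # Keep the existing entries (if any).
  if [ -n "$current" ]; then printf '%s\n' "$current"; fi
  # Append the new entry only when no identical line exists yet.
  if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}
```

Installing a daily 06:00 run would then look like `crontab -l 2>/dev/null | add_cron_line '0 6 * * * /home/me/bin/publish-site.sh >> /tmp/publish-site.log 2>&1' | crontab -`.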

Total Project Time: about a week on and off.

Total Project Satisfaction: like, 1000 or more!